The wearable remembrance agent

A system for augmented memory


Bradley J. Rhodes

MIT Media Lab, E15-305

20 Ames St.

Cambridge, MA 02139

rhodes@media.mit.edu

Published in The Proceedings of The First International Symposium on

Wearable Computers (ISWC '97), Cambridge, Mass, October 1997,

pp. 123-128.

Abstract

This paper describes the wearable Remembrance Agent, a continuously

running proactive memory aid that uses the physical context of a wearable

computer to provide notes that might be relevant in that context. A

currently running prototype is described, along with future directions for

research inspired by using the prototype.

Introduction

With computer chips getting smaller and cheaper the day will soon come when

the desk-top, lap-top, and palm-top computer will all disappear into a vest

pocket, wallet, shoe, or anywhere else a spare centimeter or two are

available. As the price continues to plummet, these devices will enable

all kinds of applications, from consumer electronics to personal

communicators to field-operations support. Given that the primary use of

today's palm-top computers is as day-planners, address books, and

notebooks, one can expect memory aids will be an important application for

wearable computers as well.

Current computer-based memory aids are written to make life easier for the

computer, not for the person using them. For example, the two most

available methods for accessing computer data are through filenames (forcing

the user to recall the name of the file), and browsing (forcing the user to

scan a list and recognize the name of the file). Both these methods are

easy to program but require the user to do the brunt of the memory task

themselves. Hierarchical directories or structured data such as calendar

programs help only if the data itself is very structured, and break down

whenever a file or a query doesn't fit into the predesigned structure.

Similarly, key-word searches only work if the user can think of a set of

words that uniquely identifies what is being searched for.

Human memory does not operate in a vacuum of query-response pairs. On the

contrary, the context of a remembered episode provides lots of cues for

recall later. These cues include the physical location of an event, who

was there, what was happening at the same time, and what happened

immediately before and after (Tulving 83). This information helps us both

to recall events given a partial context and to associate our current

environment with past experiences that might be related.

Until recently, computers have only had access to a user's current context

within a computational task, but not outside of that environment. For

example, a word-processor has access to the words currently typed, and

perhaps files previously viewed. However, it has no way of knowing where

its user is, whether she is alone or with someone, whether she is thinking

or talking or reading, etc. Wearable computers give the opportunity to

bring new sensors and technology into everyday life, such that these pieces

of physical context information can be used by our wearable computers to

aid our memory using the same information humans do.

This paper will start by describing features available in wearable

computers that are not available in current laptops or Personal Digital

Assistants (PDAs). It will then show how these features are being

exploited by describing the latest version of the Remembrance Agent (or

RA), a wearable memory aid that continually reminds the wearer of

potentially relevant information based on the wearer's current physical and

virtual context. Finally, it will discuss related work and extensions that

are being added to the current prototype system.

Wearable computers vs. PDAs

The fuzzy definition of a wearable computer is that it's a computer that is

always with you, is comfortable and easy to keep and use, and is as

unobtrusive as clothing. However, this "smart clothing" definition is

unsatisfactory when pushed on the details. Most importantly, it doesn't

convey how a wearable computer is any different from a very small palm-top.

A more specific definition is that wearable computers have many of the

following characteristics:

- Portable while operational: The most distinguishing feature of a

wearable is that it can be used while walking or otherwise moving around.

This distinguishes wearables from both desktop and laptop computers.

- Hands-free use: Military and industrial applications for wearables

especially emphasize their hands-free aspect, and concentrate on speech

input and heads-up display or voice output. Other wearables might also use

chording-keyboards, dials, and joysticks to minimize the tying up of a

user's hands.

- Sensors: In addition to user-inputs, a wearable should have

sensors for the physical environment. Such sensors might include wireless

communications, GPS, cameras, or microphones.

- "Proactive": A wearable should be able to convey information to

its user even when not actively being used. For example, if your computer

wants to let you know you have new email and who it's from, it should be

able to communicate this information to you immediately.

- Always on: By default a wearable is always on and working,

sensing, and acting. This is opposed to the normal use of pen-based PDAs,

which normally sit in one's pocket and are only woken up when a task needs

to be done.

The Remembrance Agent

The Remembrance Agent is a program that continuously "watches over the

shoulder" of the wearer of a wearable computer and displays one-line

summaries of notes-files, old email, papers, and other text information

that might be relevant to the user's current context. These summaries are

listed in the bottom few lines of a heads-up display, so the wearer can

read the information with a quick glance. To retrieve the whole text

described in a summary line, the wearer hits a quick chord on a chording

keyboard.

The Desktop RA

An earlier desktop version of the RA is described in (Rhodes & Starner 96). This

previous version suggests old email, papers, or other text documents that

are relevant to whatever file is currently being written or read in a

word-processor. The system has been in daily use for over a year now, and

the suggestions it produces are often quite useful. For example, several

researchers have indexed journal abstracts from the past several years, and

use the RA to suggest references to papers they are currently writing. The

system has also been used to keep track of email threads, by recommending

old email relevant to current email being read.

The Wearable RA

When the system was ported to a wearable computer, new applications became

apparent. For example, when taking notes at conference talks the

remembrance agent will often suggest documents that lead to questions for

the speaker. Because the wearable is taken everywhere, the RA can also

offer suggestions based on notes taken during coffee breaks, where laptop

computers cannot normally be used. Another advantage is that because the

display is proactive, the wearer does not need to expect a suggestion in

order to receive it. One common practice among the wearables users at

conferences is to type in the name of every person met while shaking hands.

The RA will occasionally remind the wearer that the person whose name was

entered has actually been met before, and can even suggest the notes taken

from that previous conversation.

While useful, the system described above does not go far enough in using

the physical sensors that could be integrated into a wearable computer.

For example, a wearer of the system should not have to type in the name of

every person met at a conference. Instead, the wearable should

automatically know who is being spoken to, through active badge systems or

eventually through automatic face-recognition. Similarly, the wearable

should know its own physical location through GPS or an indoor location

sensor.

When available, this automatically detected physical context is used by the

new RA to help determine relevant information. This context information is

used both in indexing and in later suggestion-mode. In indexing, notes

taken on the wearable are tagged with context information and stored for

later retrieval. In suggestion-mode, the wearer's current physical context

is used to find relevant information. If sensor data is not available (for

example if no active-badge system is in use) the wearer can still type in

additional context information. The current version of the RA uses five

context cues to produce relevant suggestions:

- Wearer's physical location. This information could be provided by GPS,

an indoor location system, or a location entered explicitly by the user on

the chording keyboard.

- People who are currently around. This information can come from an

active badge system, another person's wearable computer, or again can be

entered by the wearer.

- Subject field, which can be entered by the wearer as an extra tag, or

in indexing can be extracted from header fields such as the subject line in

email.

- Date and time-stamp. These can be stamped onto note files with a

single chord on the keyboard, or can be extracted from more structured

data. In retrieval, this information comes from the system clock.

- The information itself (the body of the note), which is turned into a

word-vector for later keyword analysis. In retrieval, this information

comes from whatever the wearer is currently reading or writing on the

heads-up display.
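
The five cues above could be carried by a small record attached to each note. The following is a minimal sketch in Python, not the RA's actual data format (the RA back end is written in C); the names `ContextNote` and `missing_cues`, the field names, and the sample values are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional


@dataclass
class ContextNote:
    """One note plus its context cues (all names are illustrative)."""
    body: str                              # the information itself
    location: Optional[str] = None         # e.g. a room name; None if unknown
    people: List[str] = field(default_factory=list)  # people currently around
    subject: Optional[str] = None          # extra tag or email subject line
    timestamp: Optional[datetime] = None   # date and time-stamp

    def missing_cues(self) -> List[str]:
        """List the cues that no sensor or header supplied, so the wearer
        could be offered the chance to type them in or leave them blank."""
        missing = []
        if self.location is None:
            missing.append("location")
        if not self.people:
            missing.append("people")
        if self.subject is None:
            missing.append("subject")
        if self.timestamp is None:
            missing.append("timestamp")
        return missing


# A note taken with a location and a time-stamp, but no badge data or subject.
note = ContextNote(
    body="Hieroglyphic determinatives clarify word meaning.",
    location="classroom",
    timestamp=datetime(1997, 10, 13, 10, 30),
)
```

Keeping every cue optional mirrors the text: a cue may come from a sensor, be typed by the wearer, or stay blank.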

An example scenario makes the interaction of these context cues more

apparent. Say the wearer of the RA system is a student heading to a

history class. When she enters the classroom, note files that had

previously been entered in that same classroom at the same time of day will

start to appear. These notes will likely be related to the current course.

When she starts to take notes on Egyptian Hieroglyphics, the text of her

notes will trigger suggestions pointing to other readings and note files on

Egyptology. These suggestions can be biased to favor hieroglyphics in

particular by setting the subject field to "hieroglyphics." When she

later gets out of class and runs into a fellow student, the identity of the

student is either entered explicitly or conveyed through an active badge

system. The RA starts to bring up suggestions pointing to notes entered

while around this person, including an idea for a project proposal that

both students were working on. Finally, the internal clock of the wearable

gets close to the time of a calendar entry reminding the wearer of a

meeting, and the RA brings up pointers to that entry to remind her that she

should be on her way.

Internals

Indexing documents

When a note is written down, the location, person, subject, and date tags

are automatically attached to the note if they are available. If one of

the four context tags is not available (for example, if no active badge is

being used), then the writer of the note can enter the field by hand using

the chording keyboard. The fields can also be left blank. New tags can be

added easily, and it is hoped that as new sensors become available they can

be integrated into the existing system and replace the by-hand entry.

Sometimes these context fields can also be determined from a source other

than physical sensors. For example, subject and person tags can

automatically be extracted from email header information. In this case,

the person field is the person who sent the email, not the person who

happens to be with you when you read the email. If an indexed file is a

structured source such as email or HTML, this is detected and the RA

automatically picks out any information it can from the file. Multiple

notes or documents can also be detected and broken out of a single file, as

would be the case for an email archive file.
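
As an illustration of extracting tags from a structured source, the sketch below pulls person, subject, and date tags from the headers of a single email using Python's standard `email` package. It is not the RA's own parser; the function name `tags_from_email`, the returned dictionary layout, and the sample message are assumptions made for this example. Splitting an archive file into individual messages is omitted.

```python
from email import message_from_string
from email.utils import parseaddr, parsedate_to_datetime

# A made-up message used only to exercise the sketch.
RAW_EMAIL = """\
From: Alice Example <alice@example.org>
Subject: Project proposal
Date: Mon, 13 Oct 1997 10:30:00 -0400

Here is the draft of the proposal we discussed.
"""


def tags_from_email(raw: str) -> dict:
    """Pull person, subject, and date tags from email headers.
    A missing header simply yields None for that tag."""
    msg = message_from_string(raw)
    name, address = parseaddr(msg.get("From", ""))
    date_header = msg.get("Date")
    return {
        # The person tag is the sender, not whoever is nearby when reading.
        "person": name or address or None,
        "subject": msg.get("Subject"),
        "date": parsedate_to_datetime(date_header) if date_header else None,
        "body": msg.get_payload(),
    }


tags = tags_from_email(RAW_EMAIL)
```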

After being tagged, these notes, email, and other information sources are

indexed by the back end. Common words in the body of a note file are

thrown out, and the remaining words are stemmed and converted into a

word-vector where the number of occurrences of each word in the indexed

document is an element in the vector. Large files are broken up into

smaller overlapping "windows" so the topic of a single vector will tend

to remain focused. This window information is used later in the suggestion

mode to jump to the most relevant part of a suggested document when it's

brought up for full viewing. The indexing method used here is the same

used for the previous version of the RA, and is based on the SMART

algorithm described in (Salton 71). After indexing the body, the

location, person, date, and subject information are encoded as typed

tokenized words, and are merged into the vector.
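
The indexing steps described above can be sketched roughly as follows. This is an illustration under stated assumptions rather than the RA's C back end: the stop list is a tiny placeholder, `crude_stem` is a naive suffix stripper standing in for a real stemmer, and the window size and overlap are arbitrary values chosen for the example.

```python
import re
from collections import Counter
from typing import Dict, List

# Tiny illustrative stop list; a real one would be far longer.
STOP_WORDS = {"a", "an", "and", "are", "in", "is", "of", "on", "the", "to"}


def crude_stem(word: str) -> str:
    """Very rough suffix stripping, standing in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def tokenize(text: str) -> List[str]:
    """Lower-case the text, drop common words, and stem what remains."""
    words = re.findall(r"[a-z]+", text.lower())
    return [crude_stem(w) for w in words if w not in STOP_WORDS]


def word_vector(tokens: List[str]) -> Dict[str, int]:
    """Map each term to its number of occurrences."""
    return dict(Counter(tokens))


def windows(tokens: List[str], size: int = 50, overlap: int = 25) -> List[List[str]]:
    """Split a long token list into overlapping windows (overlap < size)."""
    if len(tokens) <= size:
        return [tokens]
    step = size - overlap
    return [tokens[i:i + size] for i in range(0, len(tokens) - overlap, step)]


vec = word_vector(tokenize("The notes on reading hieroglyphs and the hieroglyph signs"))
parts = windows(list("abcdefghij"), size=4, overlap=2)
```

Each window would then get its own vector, so that a long document is represented by several focused vectors instead of one diffuse one.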

Suggestion mode

When running in its normal suggestion mode, the RA will convert the current

screen-full of text being looked at into a word vector, in the same way

vectors are created during indexing. The current location, person, date,

and subject context information is also merged into this "query vector,"

but the vector can be weighted more or less heavily in favor of each of

these context cues or in favor of the main body-vector.
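
One way to picture the weighted query vector is shown below. The sketch assumes a `field:value` spelling for typed tokens and a simple per-field multiplier; neither detail is given in this paper, and the names `typed_token` and `build_query_vector` are hypothetical. Date is left out here because it is compared by distance rather than by token match.

```python
from collections import Counter
from typing import Dict, Optional


def typed_token(field: str, value: str) -> str:
    """Encode a context value as a typed token, e.g. 'person:alice',
    so it cannot collide with an ordinary body word."""
    return f"{field}:{value.strip().lower()}"


def build_query_vector(
    body_terms: Dict[str, float],
    context: Dict[str, Optional[str]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Merge body terms and typed context tokens into one weighted vector."""
    query: Dict[str, float] = Counter()
    for term, count in body_terms.items():
        query[term] += weights.get("body", 1.0) * count
    for field, value in context.items():
        if value:  # skip cues that are blank or unavailable
            query[typed_token(field, value)] += weights.get(field, 1.0)
    return dict(query)


# Bias suggestions toward the subject field, as in the classroom scenario.
query = build_query_vector(
    body_terms={"hieroglyph": 2, "sign": 1},
    context={"person": None, "subject": "Hieroglyphics", "location": "Room 101"},
    weights={"body": 1.0, "subject": 3.0},
)
```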

The RA will then compare the query vector with each of the indexed vectors.

In the case of discrete information (word, person, subject, and

room-location information), the similarity is found by taking the dot

product of the two vectors. The larger the dot-product, the more relevant

that particular document is to the query vector. In the case of vectors of

continuous features (date and GPS coordinates), the distance between the

closest two elements is used to create a weighted relevance. The

similarities between vectors are used to produce a weighted sum, and the

most relevant documents are summarized. The summary lines are displayed

continuously on the bottom few lines of the heads-up display, as shown in

Figure 1. When the context is changing, suggestions are updated every five seconds.

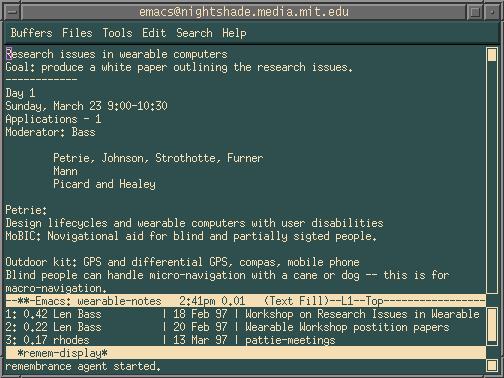

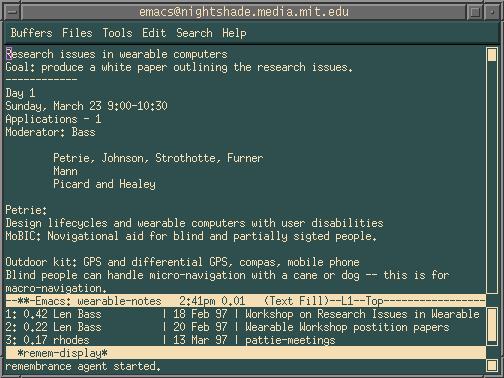

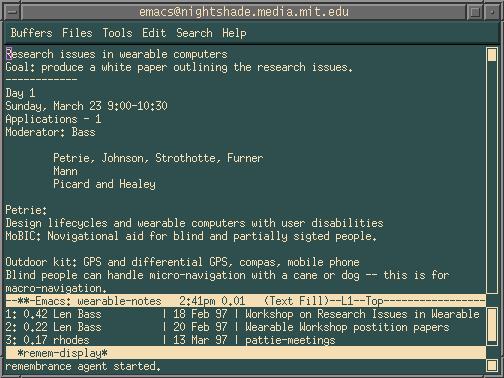

- Figure 1: A screen-shot of the wearable RA. The main screen shows notes being

taken during a workshop on wearable computing; the bottom shows the RA's

suggestions. Suggestions (1) and (2) are both from email, so the person

field is automatically filled in from the "from" field. In the third

entry, person information was not available so it defaulted to the owner of

the file.
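
The comparison step in suggestion mode can be sketched as follows. Discrete cues are scored with a dot product, as described in the text. The continuous cue is scored from the smallest distance between any pair of values, but the exact mapping from distance to relevance is not specified in this paper, so the `1 / (1 + d / scale)` form is an assumption of this sketch. The dictionary layout, the `hours` field (time of day), and all function names are likewise hypothetical.

```python
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two sparse vectors stored as dicts."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller vector
    return sum(value * b.get(term, 0.0) for term, value in a.items())


def closeness(xs: List[float], ys: List[float], scale: float) -> float:
    """Relevance in (0, 1] from the smallest distance between any pair of
    values; returns 0.0 if either side has no values."""
    if not xs or not ys:
        return 0.0
    d = min(abs(x - y) for x in xs for y in ys)
    return 1.0 / (1.0 + d / scale)


def score(query: dict, doc: dict, w_discrete: float = 1.0, w_time: float = 1.0) -> float:
    """Weighted sum of the discrete and continuous similarities."""
    return (w_discrete * dot(query["terms"], doc["terms"])
            + w_time * closeness(query["hours"], doc["hours"], scale=1.0))


def rank(query: dict, docs: List[dict], top_n: int = 3) -> List[Tuple[float, str]]:
    """Return the top_n (score, summary) pairs, best first."""
    scored = [(score(query, d), d["summary"]) for d in docs]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_n]


docs = [
    {"summary": "History lecture notes",
     "terms": {"hieroglyph": 3.0, "egypt": 1.0}, "hours": [10.0]},
    {"summary": "Grocery list", "terms": {"milk": 1.0}, "hours": [18.0]},
]
query = {"terms": {"hieroglyph": 2.0, "sign": 1.0}, "hours": [10.5]}
results = rank(query, docs)
```

The summaries in `results` correspond to the one-line suggestions shown at the bottom of the display.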

Sometimes a suggestion summary line can be enough to jog a memory, with no

further lookup necessary. However, often it is desirable to look up the

complete reference being summarized. In these cases, a single chord can be

hit on the chording keyboard to bring up any of the suggested references in

the main buffer. If the suggested file is large, the RA will automatically

jump to the most relevant point in the file before displaying it.
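
Jumping to the most relevant point could reuse the per-window vectors created during indexing. The sketch below assumes each window is stored as a `(start_line, term_vector)` pair and that relevance is again a dot product; the storage layout and the name `best_window_offset` are hypothetical.

```python
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two sparse vectors stored as dicts."""
    return sum(value * b.get(term, 0.0) for term, value in a.items())


def best_window_offset(query: Vector, windows: List[Tuple[int, Vector]]) -> int:
    """Return the start line of the window most similar to the query.
    Expects at least one window; ties go to the earliest window."""
    best_start, best_score = windows[0][0], dot(query, windows[0][1])
    for start, vec in windows[1:]:
        s = dot(query, vec)
        if s > best_score:
            best_start, best_score = start, s
    return best_start


# Three windows of one long file, keyed by the line at which each begins.
file_windows = [
    (0, {"intro": 2.0}),
    (40, {"hieroglyph": 3.0}),
    (80, {"hieroglyph": 1.0}),
]
offset = best_window_offset({"hieroglyph": 1.0}, file_windows)
```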

Explicit Query

While the RA is designed to be a proactive reminder system, it also

provides the functionality of a standard fuzzy-query database engine. By

entering a query at an emacs prompt, the wearer can override all current

context cues with the new input.

Hardware

The RA is composed of a front end written in emacs lisp and a back-end

written in C, and runs on most UNIX platforms. It is currently running

under Linux on a wearable 100MHz 486 based on the "Tin Lizzy" design

developed by Thad Starner (Starner 95). The input device is a

one-handed chording keyboard called the Twiddler (made by Handykey), with

which one can reach typing speeds of 30-50 words per minute on a

full-function keyboard. The output device is a Private Eye heads-up

display, a 720x280 monochrome display, which produces a crisp 80x25

character screen. The display is currently worn as a "hat top computer,"

with the viewscreen pointing down from the top right corner of the wearer's

field of view (see Figure 2). This mount

position gives the wearer the ability to make full eye-contact while still

allowing access to a full screen of information with a single glance.

Others in the wearables group at the Media Lab have experimented with

eyeglass-mounts that provide an overlay effect of text or graphics on the

real world (Starner et al 95).

- Figure 2: The heads-up display for the wearable platform.

Related Work

Probably the closest system to this work is the Forget-me-not system

developed at The Rank Xerox Research Center (Lamming 94). The

Forget-me-not is a PDA system that records where its user is, who they are

with, who they phone, and other such autobiographical information and

stores it in a database for later query. It differs from the RA in that

the RA looks at and retrieves specific textual information (rather than

just a diary of events), and the RA has the capacity to be proactive in its

suggestions as well as answer queries.

Several systems also exist to provide contextual cues for managing

information on a traditional desktop system. For example, the Lifestreams

project provides a complete file management system based on time-stamp

(Freeman 96). It also provides the ability to tag future events,

such as meeting times, that trigger alarms shortly before they occur.

Finally, several systems exist to recommend web-pages based on the

pages a user is currently browsing (Lieberman 95, Armstrong 95).

Design issues and future work

The physical-based tags are a recent extension to the RA, but the base

system has been up and running on the wearable platform for several months,

and several design issues are already apparent from using this prototype.

These issues will help drive the next set of revisions.

The biggest design trade-off with the RA is between making continuous

suggestions versus only occasionally flashing suggestions in a more

obtrusive way. The continuous display was designed to be as tolerant of

false positives as possible, and to distract the wearer from the real world

as little as possible. The continuous display also allows the wearer to

receive a new suggestion literally in the blink of an eye rather than

having to fumble with a keyboard or button. However, because suggestions

are displayed even when no especially relevant suggestions are available,

the wearer has a tendency to distrust the display, and after a few weeks of

use our limited experience suggests that the wearer tends to ignore the

display except when they are looking at the screen anyway, or when they

already realize that a suggestion might be available. The next version of

the RA will cull low-relevancy hits entirely from the display, leaving a

variable-length display with more trustworthy suggestions.

Furthermore, notifications that are judged to be too important to miss (for

example, notification that a scheduled event is about to happen) will be

accompanied by a "visual bell" that flashes the screen several times.

This flashing is already being used in a wearable communications system on

the current heads-up mounted display, and has been satisfactory in getting

the wearer's attention in most cases. Another lesson learned from the

interface for this communications system is that the screen should

radically change when an important message is available. This way the

wearer need not read any text to see if there is an important alert.

Currently, the communications system prints a large reverse-video line

across the lower half of the screen, which is used to quickly determine

if a message has arrived.

Another trade-off has been made between showing lots of text on the screen

versus showing only the most important text in large fonts. The current

design shows an entire 80 column by 25 row screen, but this often produces

too much text for a wearer to scan while still trying to carry on a

conversation. Future versions will experiment with variable font size and

animated typography (Small 94).

Acknowledgments

I'd like to thank Jan Nelson, who coded most of the Remembrance Agent

back-end, and my sponsors at British Telecom.

References

R. Armstrong, D. Freitag, T. Joachims, and T. Mitchell, 1995. WebWatcher:

A Learning Apprentice for the World Wide Web, in AAAI Spring Symposium on

Information Gathering, Stanford, CA, March 1995.

E. Freeman and D. Gelernter, March 1996. Lifestreams: A storage model for

personal data. In ACM SIGMOD Bulletin.

M. Lamming and M. Flynn, 1994. "Forget-me-not:" Intimate Computing in

Support of Human Memory. In Proceedings of FRIEND21, '94 International

Symposium on Next Generation Human Interface, Meguro Gajoen, Japan.

H. Lieberman, 1995. Letizia: An Agent That Assists Web Browsing. In International

Joint Conference on Artificial Intelligence, Montreal, August 1995.

B. Rhodes and T. Starner, 1996. "Remembrance

Agent: A continuously running automated information retrieval system".

In Proceedings of Practical Applications of Intelligent Agents and

Multi-Agent Technology (PAAM '96), London, UK.

G. Salton, ed. 1971. The SMART Retrieval System -- Experiments in Automatic

Document Processing. Englewood Cliffs, NJ: Prentice-Hall, Inc.

D. Small, S. Ishizaki, and M. Cooper, 1994. Typographic space. In CHI '94

Companion.

T. Starner, S. Mann, and B. Rhodes, 1995. The MIT wearable computing web

page. http://wearables.www.media.mit.edu/projects/wearables/.

T. Starner, S. Mann, B. Rhodes, J. Healey, K. Russell, J. Levine, and

A. Pentland, 1995. Wearable Computing and Augmented Reality, Technical

Report, Media Lab Vision and Modeling Group RT-355, MIT.

E. Tulving, 1983. Elements of episodic memory. Clarendon Press.